AI in software development can help, but don't let it lead

Can AI take over the work of a software development team? Here’s our honest answer: sometimes it can, sometimes only partially, and sometimes not even close. AI often falls short in understanding people, context, and complexity. It’s great at generating suggestions, but not so great at handling uncertainty, conflict, or nuance.

We know the value of artificial intelligence firsthand: we help our clients integrate AI into their solutions or develop AI tools that deliver tangible business results, without the hype and overpromises.

So when you’re building software that actually works for people in the real world, you need more than algorithms. You need real experts. Here’s why that still matters more than ever.

Why AI can’t manage a project team

Can’t AI just run the project for us? Not quite. While AI can be a powerful assistant, it’s still a long way from managing a software development team, if it ever gets there.

Let’s bring this into the real world and look at a typical scenario from a project manager’s workday.

It’s 9-ish AM, and your project manager is already on their second coffee, kicking off the day with a sync call. A developer is blocked on an integration issue, the designer needs clarity on client feedback, and a stakeholder just sent an email titled “URGENT.” By 11 AM, the PM is reshuffling priorities, adjusting the sprint board, and preparing for a demo later that day.

At noon, there’s a quick call with the client, who wants a new feature added before next month’s launch. The PM listens, probes for what’s behind the ask, and negotiates a compromise that keeps everyone happy. Later, they coach a junior dev through scope-creep frustration, rally the team to hit an end-of-day milestone, and send out a progress update that strikes the perfect balance between optimism and realism.

Now, imagine asking AI to do all of that.

What project managers actually do

Project managers are strategists, communicators, negotiators, and emotional anchors. They:

- Define scope and manage shifting priorities

- Serve as the bridge between clients, leadership, and technical teams

- Lead standups, retros, and roadmap planning

- Resolve team conflicts before they escalate

- Balance deadlines with sanity

- Adapt plans in real time (because things almost never go exactly according to plan)

None of this is plug-and-play; it’s situational and human.

How AI tools help Globaldev with project management

AI can certainly help with the complexity of project management, but only from the sidelines.

Many project managers, including ours, now utilize AI-powered meeting transcription tools that capture conversations and spit out neat summaries suggesting who should do what next. This kind of automation can let PMs skip the tedious note-taking to focus on more urgent or strategic tasks, while still giving them reliable documentation (without the usual layers of bureaucracy).

Add to that AI’s ability to create templates, break down and prioritize tasks, automate reporting (if you integrate the tools right), or suggest resource allocations based on availability, and you get a useful assistant. But being helpful isn’t the same as being able to replace the judgment, adaptability, and human insight a real project manager brings to the table.

Some things you just can’t automate

Where AI consistently falls short is in the sometimes unpredictable reality of real-world collaboration. Most AI tools need clean, complete, and structured data to do their job well, but let’s be honest, that’s rarely what actual projects provide. Market demands now shift more often than they used to, and each shift can change the project’s direction, reorder priorities, and add or remove features.

Another important thing is the structure of the software development team. In hybrid setups like team extension, for instance, where half the team is on our side and half resides in the client’s office, there are just too many moving parts. We can try to uphold best practices on our side, but we can’t control the completeness of data coming from outside systems or siloed processes. And when AI is fed outdated, incomplete, or biased information, it often fumbles, confidently delivering bad advice that can throw a project off course.

Time and task estimation is another place where things get tricky. Sure, AI tools can generate schedules and give optimistic timelines. But the moment the scope shifts or a requirement changes mid-sprint, those beautifully color-coded timelines fall apart. AI can’t adapt to new realities, re-align with shifting goals, or judge whether a team’s overwhelmed or just in deep focus — it simply doesn’t have enough context.

Even in theory, handing more control to AI in project management raises awkward questions. Who does the talking when a stakeholder pushes back? An avatar? At the moment, AI avatars handle simpler things reasonably well, but ask one to negotiate a scope change or pick up on a client’s hesitation, and it’s like sending Clippy to run your quarterly roadmap call. Future versions might learn to do more, but that would require constant access to a firehose of high-quality, real-time data. And even then, how do you teach an algorithm to sense team morale or interpret subtle tension in a call?

That’s why, at Globaldev, our project managers remain essential. They don’t just track timelines; they anticipate problems, pick up on what goes unsaid, build trust, and adapt on the fly. They know when to push, when to pause, and how to keep everyone rowing in the same direction, even when the waters get rough. We’re happy to use AI where it adds value. But leadership? That stays human.

Business analysis requires reading the room, not just data

The role of a business analyst (BA) in software development goes far beyond writing requirements. BAs are deeply embedded in every stage of the development lifecycle.

The true scope of a business analyst’s role

Business analysts are responsible for gathering and clarifying business needs, translating them into functional documentation, and ensuring the final product actually solves a real problem.

This involves everything from fit-gap analysis and backlog prioritization to planning and leading refinement sessions, writing user guides, and helping manage change requests. They work hand-in-hand with developers, product owners, and stakeholders, not only to communicate goals, but to negotiate them. They also test system usability, uncover edge cases, and continuously learn and adapt as the product evolves.

A good BA brings not just technical understanding, but also empathy, curiosity, and sharp problem-solving instincts. They’re investigators, translators, facilitators, and decision-makers all at once.

Where AI adds value for business analysts

AI can definitely assist with the more repetitive or research-heavy parts of the BA role. Some of our most experienced analysts use customized AI setups as part of their daily toolkit. They’ll feed large documentation sets into a model to identify inconsistencies, ask AI to suggest acceptance criteria based on past projects, or summarize stakeholder meeting transcripts to save time.

Used well, AI works like a junior employee: it handles the busywork, boosts productivity, and surfaces insights that might otherwise take a long time to dig up. It’s particularly useful for version control, document comparison, and early-stage brainstorming. However, it still requires close supervision. As one BA on our team put it: “I treat AI like a strong intern. Sometimes it does great. Other times, I have to correct it a lot”.

The gaps AI just can’t fill

For all its promise, AI struggles with the core of business analysis: nuance. It’s common for stakeholders to explore their needs in real time as the project unfolds. Their goals are fuzzy, their feedback is vague, and their constraints change weekly. AI needs clarity to function, but business analysis begins where clarity is lacking.

Public AI tools are also trained on limited and inconsistent examples. Most companies don’t openly publish their best requirement docs (for good reason), which means AI has a hard time learning what “good” even looks like. This leads to vague or misleading outputs: diagrams that don’t make sense, user stories that miss key logic, and suggestions that ignore business context entirely.

In one industry discussion, a few BAs shared how even basic AI-generated transcripts created confusion. One specialist said the AI couldn’t distinguish between an example, an assumption, and a decision, which made the whole document practically useless. Others noted that AI can suggest a direction but fails to highlight long-term risks, hidden dependencies, or real-world blockers. And crucially, AI can’t “read the room” during a stakeholder interview, detect hesitation, or know when to dig deeper into a vague requirement.

Security is another issue. Feeding sensitive business logic into an external AI tool isn’t always an option. And even internally, there’s always the risk of misinterpretation if the model is trained on limited or outdated data.

Business analysis might be one of the most AI-resistant roles in software because it’s so high-context. It’s part detective work, part diplomacy, and part translation: decoding vague requests, smoothing out contradictions, and shaping ideas into something that actually works.

Yes, artificial intelligence speeds things up and makes specialists more productive by removing some of the repetitive work. However, it’s still nowhere near able to do the whole job on its own.

Globaldev BAs use AI where it makes practical sense, as a second pair of eyes or a copilot. But it takes a human specialist to ask the right questions, draw out valuable insights, and build a future-proof plan.

Code generators aren’t developers

A software developer’s job is part engineering, part problem-solving, and part pattern recognition built over years of experience. Their day might start with reviewing open tasks, catching up on Slack threads, and unblocking teammates during stand-up. Then it’s onto long stretches of focused coding, debugging elusive issues, reviewing pull requests, and documenting what was just created, often while fielding messages from QA, product, or clients.

Developers design systems, anticipate edge cases, troubleshoot bugs in production, and continuously improve existing architecture. They translate business logic into efficient solutions, collaborate with BAs to understand what users actually need, and work with QA to make sure it all holds together. It’s logical, yes, but also intuitive, strategic, and creative.

In short, they don’t just build. They think through complexity and choose what (and what not) to build.

The good side of AI in development

Used wisely, AI can be a real time-saver. Many developers, including ours at Globaldev, use AI tools to handle boilerplate code, autocomplete common patterns, generate tests, and even help understand older or unfamiliar tech stacks.

One of our frontend developers put it well: “It’s a great tool if you treat it like a tool. I use it for writing tests, debugging, even finding vulnerabilities, but I always double-check its work”. AI also helps with research, often surfacing documentation or examples faster than a traditional Google search.

Used well, it gives developers a head start. But it’s not hands-free. Think of it like using autocomplete on steroids — it might finish your sentence, but you still need to make sure the sentence makes sense in context.

The catch with AI code

Here’s the problem: AI doesn’t actually understand code; it predicts it. Large Language Models (like GPT) are trained to guess what code should come next based on patterns in massive datasets, not based on your specific business logic or system architecture. So while they can write plausible-looking code, they can also hallucinate functions, introduce subtle bugs, or suggest solutions that fall apart when integrated into a real system.
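To make “plausible-looking but subtly wrong” concrete, here is a minimal Python sketch. The free-shipping rule, the function names, and the cents-based amounts are hypothetical, invented for illustration rather than taken from a real project. Both functions look reasonable at a glance; only one matches the stated business rule at its boundary.

```python
# Hypothetical business rule (an assumption for illustration only):
# orders of $100.00 or more ship free. Amounts are integer cents,
# which avoids floating-point rounding on money values.
FREE_SHIPPING_THRESHOLD_CENTS = 100_00


def ships_free_plausible(total_cents: int) -> bool:
    """The kind of line a code assistant might produce: it reads fine,
    but the strict ">" excludes an order of exactly $100.00."""
    return total_cents > FREE_SHIPPING_THRESHOLD_CENTS


def ships_free(total_cents: int) -> bool:
    """Matches the rule as written, boundary included."""
    return total_cents >= FREE_SHIPPING_THRESHOLD_CENTS


if __name__ == "__main__":
    # Compare both versions just below, at, and just above the threshold.
    for total in (99_99, 100_00, 100_01):
        print(total, ships_free_plausible(total), ships_free(total))
```

Nothing here crashes or fails to run; the only signal is a disagreement at exactly 10,000 cents. A reviewer who knows the rule, or a boundary test, catches it. A pattern-matcher has no particular reason to.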

Developers have seen it all. One moment, AI nails a helper function, the next it’s recommending non-existent libraries or suggesting logic that violates business rules. As one senior developer warned: “The biggest risk is that it always tries to give an answer, even when it shouldn’t. And if a less experienced developer trusts that blindly, vulnerable code can end up in production”.
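One cheap guard against invented dependencies is to confirm that a suggested module actually resolves before building on it. The sketch below uses Python’s standard `importlib.util.find_spec`; the helper name `missing_modules` and the made-up module name are hypothetical. It has clear limits: it only checks the current environment, and a name that does resolve says nothing about whether it is the right, or a trustworthy, package.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found
    in the current Python environment (hypothetical helper)."""
    missing = []
    for name in names:
        # find_spec returns None when no importer can locate the module.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # "json" ships with Python; the second name is made up on purpose.
    suggested = ["json", "surely_not_a_real_module_42"]
    print(missing_modules(suggested))
```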

Accuracy issues, lack of context, and hallucinations are common complaints. Some developers call it “automation without understanding”, like using a map that draws its own roads as you drive. You might end up spending more time verifying or rewriting AI-generated code than if you’d just written it yourself.

There’s also a growing “prompt fatigue” dilemma: the more context AI needs, the longer and more specific the prompt has to be. As one developer put it: “If crafting the prompt takes longer than coding it myself, then what’s the point?”

Building software takes more than code

Modern development isn’t just typing syntax; it’s knowing when to refactor for scalability, weighing tradeoffs, and solving real-world problems under constraints. It’s logic and judgment. It’s speed and restraint. And it’s something AI, at least as we know it now, simply can’t replicate.

We use AI as part of our workflow. It’s helpful, sometimes surprisingly so. But we also know that no matter how fast it writes, it can’t make high-stakes decisions or spot a subtle flaw that could sink a sprint. That still takes a human developer with experience, intuition, and the confidence to say: “That looks right, but let me double-check”.

AI is evolving, and its place in development will continue to grow. But for now, it’s still very much a tool.

Designing for the future takes human judgment and strategy, not templates

A software architect is one of the most complex and judgment-heavy roles in tech. They are responsible for shaping the entire structure of a solution, from how its components talk to each other to how it scales under load to how maintainable it’ll be five years down the line.

Architects design system blueprints, select technologies, define coding standards, and collaborate closely with development teams and stakeholders. Moreover, they assess long-term risks, enforce quality and security measures, and make technical decisions grounded in real business needs.

This role requires a deep understanding of the technical landscape, the limitations of existing systems, and the tradeoffs involved in every decision. A stack of best practices can’t offer that. It’s something you develop through years of experience and cross-functional collaboration.

Using Gen AI to speed up the basics

That said, AI has started carving out a supporting role in this space. Architects are using generative AI to speed up documentation, compare tools and libraries, prototype ideas, and even build out proof-of-concepts. These uses can save time and reduce friction in the early phases of decision-making.

However, these contributions are limited to generic, context-free outputs. AI may be able to suggest a technically valid architecture for a basic requirement, but real-world projects rarely exist in isolation. Architects are expected to make decisions that factor in much more than just technical fit — they must also consider the organization’s current tech stack, internal team expertise, business constraints, and long-term maintenance needs.

AI isn’t ready for real-world architecture

Despite the growing buzz, very few teams are seriously relying on AI to design real-world system architecture. And for good reason. There is no way any current AI tool can take on a task like adding a feature to an enterprise platform built over the past eight years with three different authentication methods, undocumented internal APIs, and a list of “known weird things”.

Architects make sense of chaos. They understand how decisions ripple across systems, teams, and timelines. They know that choosing one database over another affects the caching layer, the DevOps pipeline, and even how QA writes their tests. These are tradeoffs rooted in real business constraints.

And let’s not forget the human side: architects not only lead teams but also facilitate hard conversations with stakeholders, such as sitting down to establish a business model.

Will AI continue to evolve? Of course. But AI just can’t replace the kind of thinking that goes into sustainable, scalable system design. It can’t weigh performance against maintainability, cost against long-term value, or innovation against risk in the same way an experienced human professional can.

Real testing goes beyond test scripts

Quality assurance in software development is far more than just running through a checklist. QA specialists play a critical role in making sure products work reliably, perform under pressure, and feel intuitive to real users.

A large part of the job involves executing tests, whether manual, automated, or exploratory. Specialists might spend part of the day on API or load testing, diving into logs, reproducing bugs, and validating fixes. But QA doesn’t stop at confirming that something works; specialists need to understand how things might break and make sure issues are caught before users ever see them.

Beyond hands-on testing, QA engineers handle requirement analysis, risk assessment, and bug documentation. They pinpoint gaps or contradictions early in the process and help the team avoid costly rework later. When bugs are found, QAs don’t just log them; they clearly describe what went wrong, how to reproduce it, and why it matters.

How AI supports quality assurance now

AI is proving to be genuinely helpful in QA when used the right way. Our specialists at Globaldev use tools like GPTs, Copilot, Cursor, and Postbot to help with repetitive or complex tasks. AI can assist with quickly drafting test cases, summarizing messy requirements, analyzing stack traces, and even writing or extending test automation.

When time is tight, dropping a feature description into GPT to get a list of possible checks or edge cases can be a real time-saver. For bugs, AI can help phrase reports more clearly or explain backend errors. In some cases, it helps decode logs or give quick overviews of legacy code. It’s also helpful in exploratory analysis, spotting logical issues in documentation, or suggesting additional test flows that may not be obvious at first glance.
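As an illustration of what such a drafted list can turn into, here is a small Python sketch of a table-driven check. The username rule, the regular expression, and the case list are hypothetical, not taken from a Globaldev project; the point is the shape: boundaries, empty input, and odd characters an assistant might propose, with a human still deciding which cases matter for this product.

```python
import re

# Hypothetical feature under test (an assumption for illustration):
# a username is 3 to 20 characters of ASCII letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")


def is_valid_username(name: str) -> bool:
    # fullmatch anchors both ends, so a trailing "\n" is rejected
    # (a "^...$" pattern used with re.match would quietly accept it).
    return USERNAME_PATTERN.fullmatch(name) is not None


# Edge cases of the kind an AI assistant might draft from the feature
# description; each entry is (input, expected result).
EDGE_CASES = [
    ("", False),          # empty input
    ("ab", False),        # one below the minimum length
    ("abc", True),        # exactly the minimum
    ("a" * 20, True),     # exactly the maximum
    ("a" * 21, False),    # one above the maximum
    ("john doe", False),  # embedded space
    ("jöhn", False),      # non-ASCII letter
    ("abc\n", False),     # trailing newline
]


def failing_cases():
    """Return every case whose actual result differs from the expected one."""
    return [(value, expected) for value, expected in EDGE_CASES
            if is_valid_username(value) != expected]


if __name__ == "__main__":
    print(failing_cases() or "all edge cases pass")
```

The list is only a starting point: whether non-ASCII names should be allowed, or whether usernames are case-insensitive, is a product decision the assistant cannot make.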

Used responsibly, AI helps QAs move faster and focus on critical thinking, analysis, and communication. But there are limits.

AI isn’t enough for real testing

While AI can speed up the work around testing, it doesn’t do any testing on its own. It doesn’t click buttons, explore interfaces, or navigate user flows. It doesn’t understand how a product feels to use, or why a technically functional interface might still be frustrating. And while it can suggest test cases, it doesn’t know your users, your business logic, or your industry.

AI also can’t handle the subtleties of regulated environments, like healthcare or finance, where domain knowledge, compliance awareness, and risk evaluation are critical. For example, AI might miss errors in medical dosage logic simply because it doesn’t understand clinical best practices.

Context remains a big challenge. AI won’t know the quirks of your internal systems or how your team handles versioning, roles, or integrations. Its answers are often generic or, worse, wrong, because it lacks the full picture. That’s why all AI-generated outputs still need to be reviewed carefully, especially when the stakes are high.

And finally, QA isn’t just about the testing itself. QA specialists talk to developers, product managers, and stakeholders all the time. They explain what’s broken, why it matters, and how it could affect users or business outcomes. Such back-and-forths take people skills.

Customer-centric design requires humans

Designers are the people who ask: “Will the user know to click here instead of there?”, “Did this screen make someone feel confused, rushed, or frustrated?”, and a hundred other questions, then stay up at night trying to bring everything to intuitive perfection.

Their job goes far beyond arranging buttons and picking fonts. UX/UI designers are problem-solvers, researchers, psychologists, writers, strategists, and defenders of common sense. They start with messy questions: Who are our users? What do they really need? Why is this step confusing? And they dig deep, through interviews, analytics, user sessions, and feedback, to find the real answers.

They sketch ideas, map journeys, wireframe flows, and test everything with real people, not assumptions. If something doesn’t work, they tweak it. If it still doesn’t work, they throw it out and try again. Great design is iteration, curiosity, and a constant loop of listening and adapting.

On top of that, all of this happens within constraints: tight timelines, stakeholder preferences, technical limits, accessibility standards, and business goals. The designer’s job is to balance all of it and still make the experience feel smooth, logical, and even delightful.

Things AI can help with in UI/UX

AI tools can be genuinely useful in parts of the design workflow. Designers use AI to generate quick layout ideas, summarize research findings, assist with UX writing (like suggesting several shorter options for button text), and even create basic prototypes. It can speed things up, especially in the early stages of ideation, or help kickstart a blank page when the creative engine stalls.

In simpler use cases, AI can reduce repetitive work or surface ideas from previous design patterns that might be useful. For entry-level tasks or time-crunched moments, it may become a useful co-designer for getting something rough on the page.

The blind spots in machine-made experiences

AI faces fundamental barriers in replicating core design competencies. It cannot deeply understand human emotions and cultural contexts, both essential for empathetic design. Current AI tools work by matching patterns in existing data, which produces homogeneous, template-driven solutions that lack nuance and creativity. Many AI-generated designs are becoming increasingly similar: flat, repetitive, and missing emotional resonance. That’s because AI can only work with existing patterns, while the best UX insights come from talking directly to users.

Moreover, a lot of design projects involve enhancing existing, complex systems rather than creating new applications. This requires understanding established design systems and following numerous undocumented patterns — the contextual knowledge that AI tools currently lack.

Every user group has specific needs and behavioral patterns that cannot be generalized from broad datasets. Effective design requires understanding these unique characteristics through direct interaction. The unspoken, intuitive communication that makes up so much of UX work cannot yet be systematized. This includes reading between the lines during user interviews and recognizing when users’ stated needs differ from their actual behaviors.

Customer-centric design requires people because it fundamentally involves understanding behavior, emotions, and needs. Only designers with lived experience can truly empathize with users, read subtle social cues, and create experiences that resonate with real-world contexts.

Human-centered software needs human-centered teams

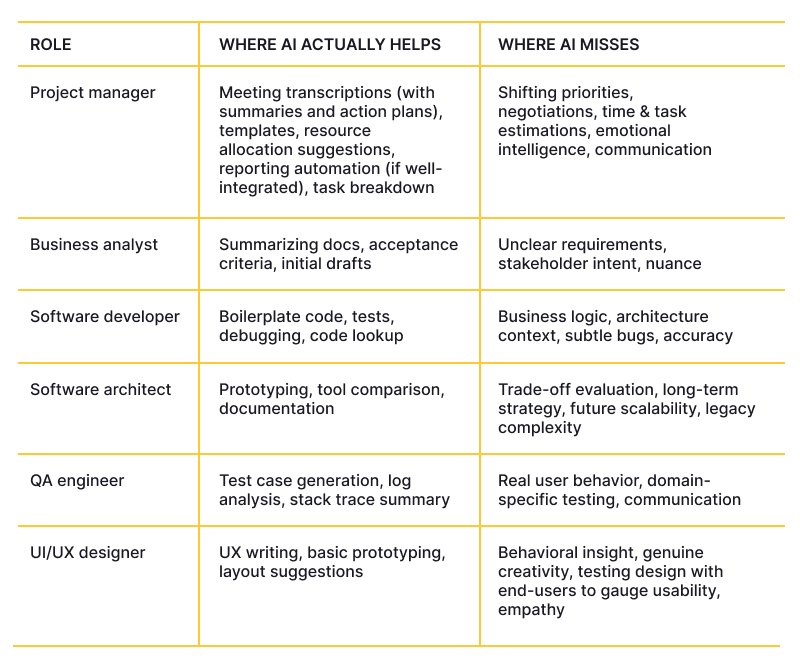

For the TL;DR crowd, we prepared a brief summary of our findings (after talking to specialists inside and outside Globaldev) on where AI can meaningfully and practically help throughout development, and where it usually fails.

AI has earned its place at the table — it can speed up tasks, surface patterns, and support specialists in meaningful ways. At Globaldev, we use it exactly for that: as a tool. Not as a decision-maker. Not as a replacement. Because the work of building great software is bigger than automation.

From BAs reading between the lines in stakeholder interviews to QAs catching invisible cracks before users ever see them, from designers who shape experiences around real emotions to architects who balance every constraint and edge case, software development is full of judgment calls. Emotional intelligence. Lateral thinking. The ability to notice when something feels off.

These are things no machine, however sophisticated, can do on its own. So yes, we’ll keep using AI to help us go faster. But we’ll keep relying on people to make sure we’re headed in the right direction. Because if you want software that’s not just functional right now, but sustainable, scalable, and user-driven, you still need a team that can think, feel, and adapt.

We build with smart tools, proven workflows, and a human-first mindset, keeping your users, your business goals, and the future in focus. If that’s the kind of thinking you’re looking for to build your software from scratch or get a few experts to fill the knowledge or resource gaps, let’s connect!